Delight your audiences as they move through the journey to purchase with creative that matches their intent, aligns with strategic insights.

In the last few years, voice user interface (VUI) based personal assistants such as Google Home, Amazon Echo, and Siri have become a familiar part of our daily lives. Thanks to improvements in voice-recognition accuracy, VUIs allow better hands-free operation, because we don’t have to read a screen while we are speaking and listening. For example, interacting with a VUI while driving makes certain tasks easier.

At present, VUI and the voice integration movement seem to be growing at a fast pace. Brands and businesses that offer voice recognition for quick information and optimize their local search presence management (e.g. contact info, directions, and hours of operation) stand to gain the most.

Below are three things marketers and brands need to know about the steady progress—and notable limitations—of voice user interface:

Although there are many VUI personal assistants on the market now, they have one thing in common: we are only beginning to understand their performance. Most VUI assistants need to be programmed and trained not only to respond to diverse languages, questions, and accents (think of the funny Scottish elevator clip) but also to handle speech impediments. It will take time and advanced algorithms for VUIs to learn new patterns and work with predictive data, along with better usability optimization.

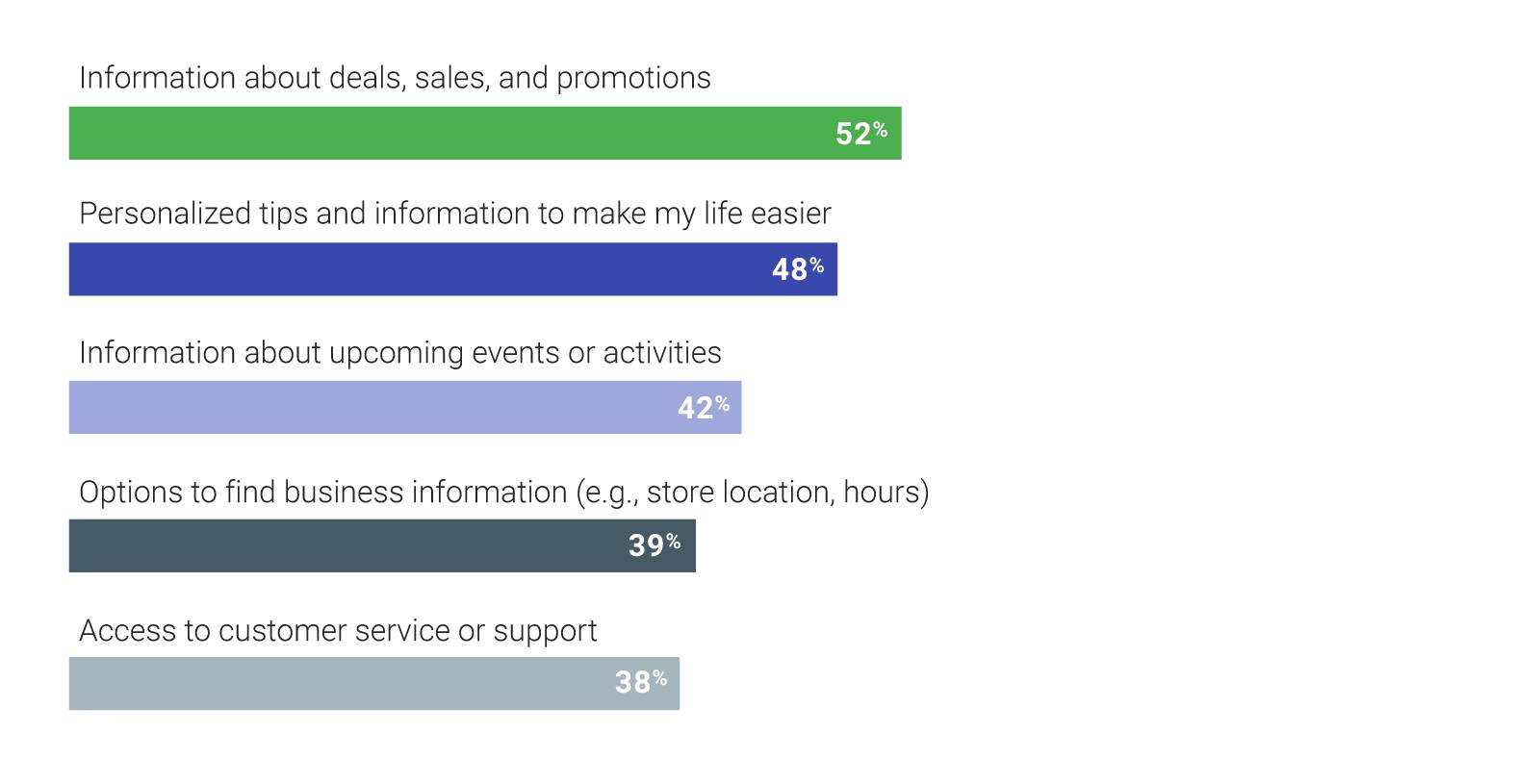

According to Sara Kleinberg, Head of Research & Insights, Marketing at Google, voice assistance is a new playground for brands. People who own voice-activated devices welcome brands as part of the experience. They are open to receiving information that is helpful and relevant to their lifestyle. Here is a chart showing what voice-activated assistant owners would like to receive from brands:

Google/Peerless Insights, “Voice-Activated Speakers: People’s Lives Are Changing,”

VUI alone does not create efficient user experiences. Research suggests that reading is far faster than, and superior to, listening. According to UX guru Don Norman, reading can be done quickly: it is possible to read around 300 words per minute and to skim, jumping ahead and back, effectively acquiring information at rates in the thousands of words per minute. By comparison, listening is slow and serial, usually at around 60 words per minute.
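To put those two rates side by side, here is a minimal sketch in Python. The 300 and 60 words-per-minute figures come from the paragraph above; the 150-word message length and the function name `minutes_to_consume` are assumptions chosen purely for illustration.

```python
# Rates quoted in the article (words per minute).
READING_WPM = 300
LISTENING_WPM = 60


def minutes_to_consume(word_count: int, words_per_minute: float) -> float:
    """Return how many minutes it takes to get through `word_count` words."""
    return word_count / words_per_minute


# Hypothetical message length, for illustration only.
message_words = 150

reading_minutes = minutes_to_consume(message_words, READING_WPM)
listening_minutes = minutes_to_consume(message_words, LISTENING_WPM)
slowdown = listening_minutes / reading_minutes

print(f"Reading:   {reading_minutes} min")
print(f"Listening: {listening_minutes} min")
print(f"Listening takes {slowdown:g}x as long")
```

Under these assumptions, the same message takes about five times as long to hear as to read, before accounting for skimming, which a listener cannot do at all.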

Further, removing the functionality of a screen in favor of voice-only interaction can limit the usability of VUIs and increase overall cognitive load and friction for users. Therefore, voice-first integration works best when it converges with screen-based interaction, creating a more integrated and holistic user experience.

According to our 2018 Voice Search Survey, the types of local information Americans are looking for when using voice assistants are: phone number (68%), directions (67%), and hours of operation of a business (63%). In addition, 36% of consumers are looking for reviews and 43% are using voice assistants to buy something.

In 2017, conducting online searches was the most common task carried out using voice. This provides a strong case for local businesses to optimize their local data and the content on their location pages.

In conclusion, VUI is still developing and showing steady growth, gaining prominence and market share in search. It will realize its full potential as it continues to integrate and converge with screen-based interaction, predictive data, and more accurate natural language understanding.

Noman Siddiqui is the User Experience (UX) Architect at DAC, based out of Toronto. To learn more about how we can help enhance the UX of your business, contact DAC.
